The line between AI applications and AI infrastructure is disappearing. A decade ago, a SaaS founder could focus on product-market fit and let cloud platforms handle the technical plumbing. But today, application-layer AI companies can’t afford to be so hands-off. To win, they need deep technical expertise in-house: engineers who understand the intricacies of AI models, know how to experiment and iterate quickly, and can abstract that complexity away for their users.

The future of the AI application layer won’t be led by no-code builders or thin wrappers. It will be led by deeply technical teams that scale like infrastructure companies, even if they deliver products to end-users. This shift is happening faster than most realize, and it’s redefining what a successful AI startup looks like.

The Blistering Pace of AI Model Development

AI is evolving at breakneck speed. In just the past three months, we’ve seen the release of Anthropic’s Claude 4 series (Opus and Sonnet), and in the open-source world, Kimi K2, GLM 4.5 by Z.ai, Qwen 3, Arcee Foundation Model, and more. Between now and the end of the year, we’re also expecting OpenAI’s GPT-5, Meta’s “Behemoth” series, and other major model drops.

Each new drop offers new capabilities and forces application teams to rethink what’s possible. This constant change puts pressure on builders to move fast. A product built on last quarter’s model can feel outdated if a competitor launches something better a few weeks later.

User expectations are evolving just as fast. Every new model release resets the bar for what people expect from AI products: smarter responses, lower latency, and more intuitive experiences.

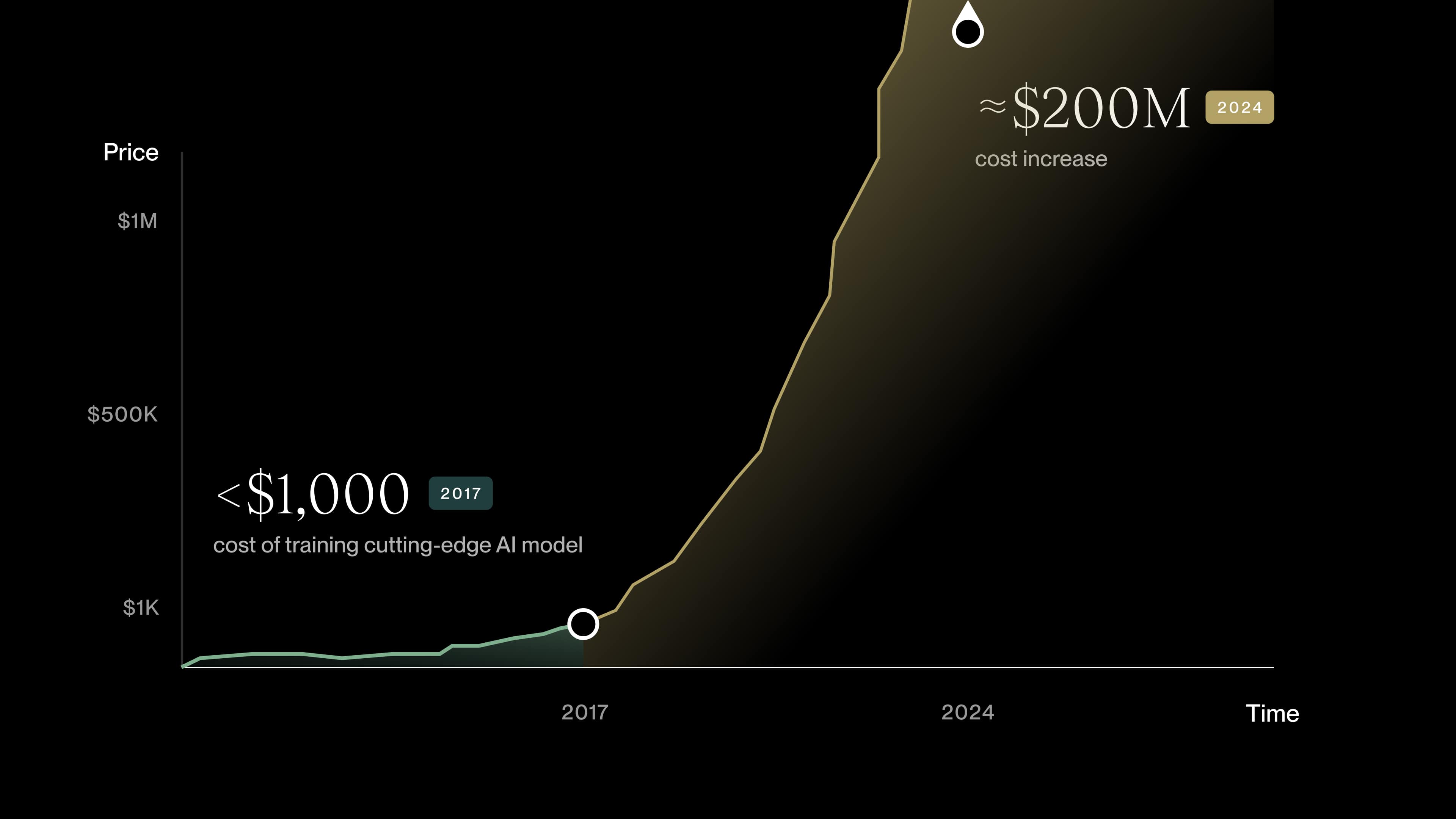

And the cost to train frontier models is exploding. OpenAI reportedly spent over $100 million to train GPT-4, while Google’s Gemini Ultra is estimated to have cost nearly $200 million. That level of capital investment signals how quickly the model layer is advancing and how high the stakes have become. The AI application teams that stay closest to the metal, adapting product capabilities to whatever the frontier unlocks, will be best positioned to win in this dynamic landscape.

Most importantly, LLMs give developers a new paradigm for processing text, audio, and even video. In this paradigm, developers call backends that are inherently nondeterministic and return unstructured output. Developers who take the time to understand how models behave and build internal infrastructure around those behaviors (e.g., context management, prompting best practices, and evals) are best positioned to deliver production-ready applications.

That means successful AI teams don’t just consume models. They study them, test them, and adapt quickly. They treat model selection and usage like product decisions and maintain a roadmap that moves as fast as the research frontier. That level of sophistication is quickly becoming table stakes.
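To make “evals” a little more concrete, here is a minimal Python sketch of one common pattern, under loud assumptions: a model is represented by a hypothetical `ModelFn` (a function that takes a prompt and returns text), and the flaky stub stands in for a real provider call. Because the backend is nondeterministic, the harness samples each prompt several times and reports a pass rate instead of a single pass/fail.

```python
import random
from dataclasses import dataclass
from typing import Callable

# Hypothetical model interface: prompt in, text out. A real version would wrap a provider SDK.
ModelFn = Callable[[str], str]


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # True if the model's output is acceptable


def pass_rate(model: ModelFn, cases: list[EvalCase], samples: int = 5) -> float:
    """Fraction of (case, sample) runs whose output passes its check.

    Each case is run several times because a nondeterministic model can
    pass or fail a single run by chance.
    """
    passed: int = 0
    total: int = 0
    for case in cases:
        for _ in range(samples):
            output = model(case.prompt)
            passed += case.check(output)  # bool counts as 0 or 1
            total += 1
    return passed / total if total else 0.0


def make_flaky_stub(seed: int) -> ModelFn:
    """Stand-in model (hypothetical) that answers correctly about 80% of the time."""
    rng = random.Random(seed)

    def stub(prompt: str) -> str:
        return "4" if rng.random() < 0.8 else "five"

    return stub


# Illustrative case: the check enforces both correctness and output format.
CASES = [
    EvalCase(
        prompt="What is 2 + 2? Answer with a single digit.",
        check=lambda out: out.strip() == "4",
    )
]

if __name__ == "__main__":
    rate = pass_rate(make_flaky_stub(seed=0), CASES, samples=100)
    print(f"pass rate: {rate:.2f}")
```

Replacing the stub with a wrapper around a real model client is what would let a team rerun the same cases against each new release and compare models on equal footing; the cases and checks here are placeholders.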

Today’s Apps, Tomorrow’s Infra

This is why so many of the best application companies are starting to look like infrastructure teams under the hood. To differentiate, they don’t just rely on OpenAI’s APIs; they build infrastructure to switch between models and run better evaluations. In the near future, some will even build their own custom models optimized for their domains. Infrastructure-level investment in service of a better app experience is on track to become the norm, not the exception.

We’re seeing this mindset at teams like Cline, which is pushing the boundaries of AI-assisted software development. With each new model release, Cline provides the earliest direct access to the model in-agent and guides users on how to get the most out of it, drawing on a clear understanding of the model’s strengths and shortcomings.

Even more horizontal companies like Bland, which automates enterprise phone calls with realistic AI voice agents, are building and shipping their own infrastructure and apps to support innovation in areas like text-to-speech (TTS). Their product looks seamless and simple, but only because the team invested in voice synthesis, call routing, and live-agent handoffs beneath the surface. That’s what modern infrastructure looks like when it’s packaged as a slick application UI.

The best AI apps today treat models as interchangeable components. They plug in new ones quickly, test what works best, and work out each new model’s kinks before exposing it to their users.

Some, like Bland, take an opinionated approach, routing tasks based on speed and cost while maintaining the highest quality bar for their end customers’ customers. Others, like Cline, put more control in the hands of the developers who use them, letting them choose models while steering them toward best practices.
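Bland hasn’t published its routing logic, so the following is only a hypothetical Python sketch of what “routing based on speed and cost while holding a quality bar” can look like: keep the models whose measured eval score clears a floor and whose latency fits the task’s budget, then pick the cheapest. The model names, prices, latencies, and scores are made-up placeholders, not real benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str                  # hypothetical model identifier
    cost_per_1k_tokens: float  # USD, placeholder value
    p50_latency_ms: float      # measured median latency, placeholder value
    quality: float             # eval pass rate in [0, 1], e.g. from an offline harness


def route(models: list[ModelProfile], min_quality: float, max_latency_ms: float) -> ModelProfile:
    """Return the cheapest model that meets both the quality floor and the latency budget.

    Ties on cost go to the lower-latency model. Raises ValueError when nothing
    qualifies, so the caller must choose a fallback explicitly instead of
    silently dropping below the quality bar.
    """
    eligible = [
        m for m in models
        if m.quality >= min_quality and m.p50_latency_ms <= max_latency_ms
    ]
    if not eligible:
        raise ValueError("no model meets the quality floor and latency budget")
    return min(eligible, key=lambda m: (m.cost_per_1k_tokens, m.p50_latency_ms))


# Placeholder catalog: a premium model, a mid-tier model, and a small open-weight model.
CATALOG = [
    ModelProfile("large-frontier", cost_per_1k_tokens=0.015, p50_latency_ms=1200, quality=0.95),
    ModelProfile("mid-tier", cost_per_1k_tokens=0.003, p50_latency_ms=600, quality=0.90),
    ModelProfile("small-open", cost_per_1k_tokens=0.0005, p50_latency_ms=250, quality=0.78),
]

if __name__ == "__main__":
    # A latency-sensitive task (e.g. a live call turn) with a moderate quality floor.
    print(route(CATALOG, min_quality=0.85, max_latency_ms=800).name)  # mid-tier
```

With these placeholder numbers, an 800 ms budget and a 0.85 quality floor land on `mid-tier`: the frontier model is too slow and the small model misses the bar. A more developer-controlled product in the Cline style might expose `min_quality` and the catalog to the user instead of fixing them internally.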

As AI apps and infrastructure increasingly blend, knowing where to abstract complexity and where to expose control will separate the good from the great. And building infrastructure in-house, or knowing when to go open source, will determine whether your product is a platform or a money pit.

Key Traits of Next-Gen AI App Teams

To meet these new demands, the most successful AI application teams are building like infrastructure teams from day one. Here are a few defining traits we see in the next generation of winners:

- Deep Technical Talent: These teams often include AI researchers, seasoned ML engineers, or founders who’ve spent time at places like DeepMind, OpenAI, or academic labs. They aren’t just consuming APIs. They’re reading research papers, running model benchmarks, and experimenting with fine-tuning.

- Full-Stack R&D: From infrastructure orchestration to UX design, next-gen AI startups are full-stack by necessity. That means investing in areas like GPU optimization, caching, memory management, and multi-model routing, which were once the domain of cloud infrastructure teams.

- Rapid Iteration: The pace of change in AI means the most successful teams have tight feedback loops between research, engineering, and product. They ship quickly, test new models often, and adapt without being overly tied to any one provider or paradigm.

- Margin Awareness: While early AI companies have often been forgiven for low margins in pursuit of growth, that window is closing. Long-term winners will design their stacks to optimize for performance and cost, balancing premium models with smaller or open-source options where possible.

Where We Go From Here

We believe the next iconic AI companies will blur the line between app and infrastructure. They’ll deliver intuitive user experiences, but those experiences will be powered by sophisticated, flexible, and efficient backends.

So if you’re building in AI, ask yourself: do you have the team DNA to adapt fast, optimize hard, and go deep technically? Are you ready to invest in the infrastructure, even if your product looks like an app?

Because in AI, the winners won’t just use the latest models. They’ll understand them better than anyone else. And they’ll build the systems that let others benefit from that power without ever needing to see the complexity underneath.
